I just returned from a great workshop on “studying digital cultures” organized by Jutta Haider, Sara Kjellberg, Joost van de Weijer & Marianne Gullberg (from HEX, the Humanities Lab, and the Department of Arts and Cultural Sciences). They invited me and Kim Holmberg (Department of Information Studies, Åbo Akademi University, Finland) to present tools and methods for studying digital cultures. Drawing on our own work, we presented, explained and discussed the tools we have used and what we used them for.

Drawing on his dissertation work, Kim presented tools developed by Mike Thelwall, SocSciBot and LexiURL in particular. Since I’ve read quite a few of Thelwall’s publications, I found it highly interesting to learn more about the technicalities involved in these link network visualization methods. If you’re willing to invest some time in understanding the various tools (and have a PC, since the tools don’t work on Macs), you can do some pretty neat stuff with them (including YouTube searches with LexiURL). The advantage of these tools is that they are free to use; the downside of LexiURL is that it only includes Bing searches. Besides serious research tools, Kim also showed us some fun stuff, including the Twitter Sentiment Analysis Tool and the We Feel Fine website, which let you explore human emotions (neither seemed to work properly, though, but try them for yourself).

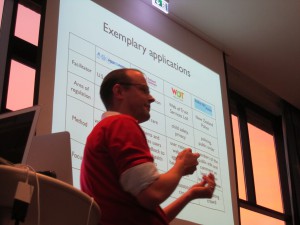

© photo credits: digital cultures blog

Compared to the methods Kim presented, the tools developed by Richard Rogers and his team are user-friendly and easy to use. Both the Issuecrawler and related tools, such as the Issue Discovery tool or the Google scraper, are ready-made software packages that allow the user to create link network visualizations by clicking through a number of settings. I’d assume this makes them appealing to social sciences and humanities scholars with limited technical skills, and less appealing to people who want to modify or adapt the tools for their own research purposes. To really understand what you see on the Issuecrawler link network maps, however, you also need a certain understanding of the software and the algorithm it incorporates, which I tried to explain during the workshop by walking participants through the software. The log-in for the Issuecrawler is easy to get and 10 crawls are free. After that, individual terms of use may be negotiated depending on whether researchers have funding or not. For more advanced users I suggested Gephi.org, a free network visualization tool with great capacities (ask Bernhard Rieder for details, he knows all about it). Finally, we played around with Wordle, a tool that creates beautiful issue clouds out of text (with one mouse click).

After the presentations, participants tried out the tools for their own research purposes. It was a mixed crowd with different research interests, which was fun. Joost van de Weijer also showed us the Lund Humanities Lab, which turned out to be really different from the Umeå HUMlab since it mainly focuses on eye tracking, motion tracking and similar technologies (it was impressive to see their “experiment” rooms!). All in all, we had a great time and a delicious dinner after a long day of digital work 😉 Thanks again to the organizers for making this event happen & to all the participants for sharing their ideas with us! Finally, I want to thank Olof Sundin, who gave me the opportunity to speak about digital methods in his methods seminar, and Karolina Lindh, who let me and Mike Frangos stay at her gorgeous apartment in Lund. You guys really made my stay, as always, a great pleasure! Take care & hope to see you again soon!!!