Two panel discussions might be worth mentioning: 1) I participated in this year’s Joint Academy Day of the Austrian Academy of Sciences (organized together with the Canadian Academy of Sciences). Our panel was concerned with the Covid-19 crisis and its social, economic, and policy implications. The video can be watched here. 2) The Austrian newspaper “Wiener Zeitung” organized a panel discussion on the topic of “Algorithms – friend or enemy?”; the link to the video can be found here.

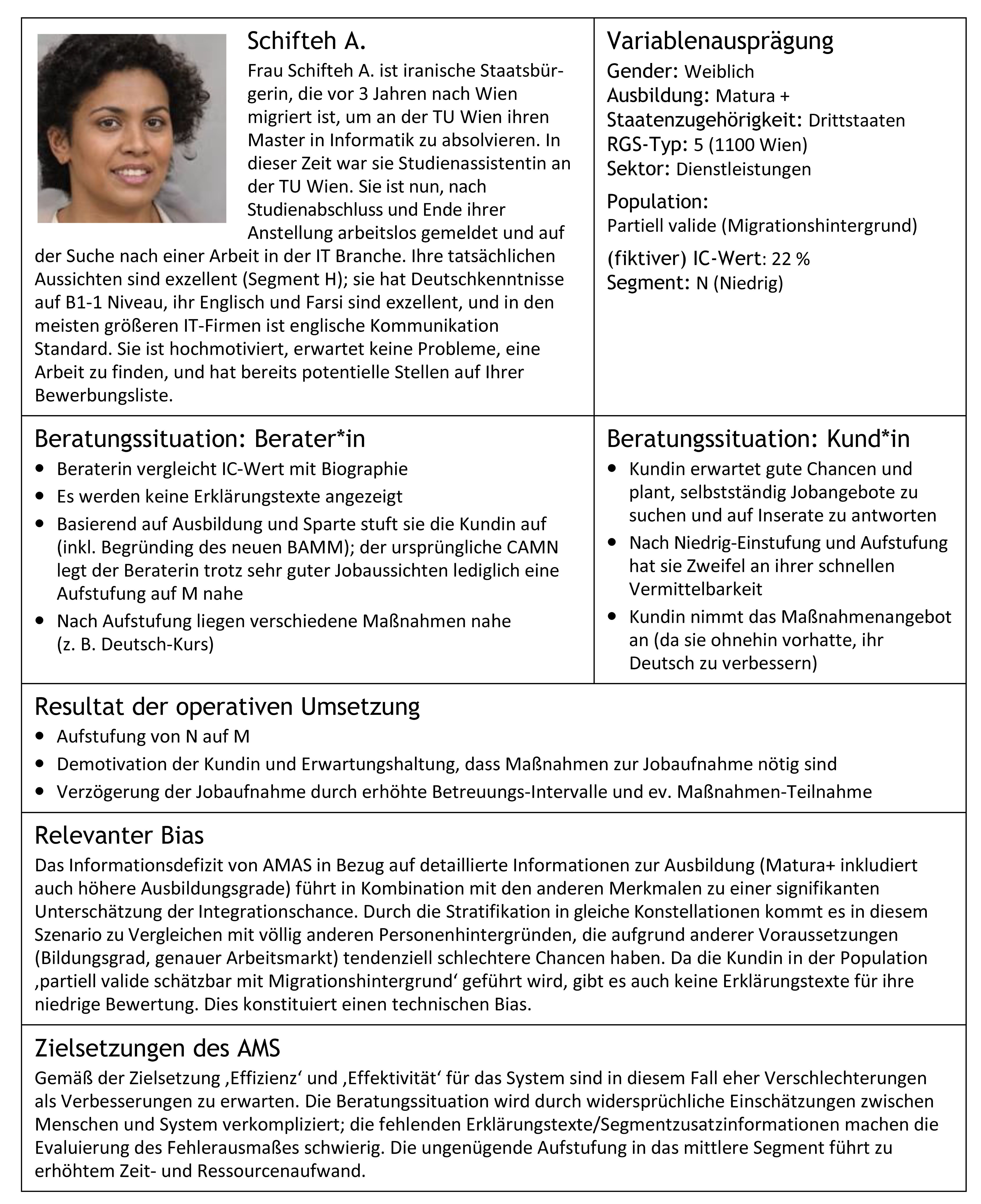

ams-algorithm_fictitious case example “Shifteh A.”

Doris Allhutter and I have written a post for the blog “Arbeit und Wirtschaft” on our study of the so-called “AMS algorithm” (in German). The blog post is available online here; the study (written together with Florian Cech, Fabian Fischer and Gabriel Grill) can be found here. For reasons of space, the “scenario” of a fictitious job seeker, Shifteh A., used in the blog post could unfortunately not be shown in full. That is why I would like to publish it here:

Since we had direct access neither to AMAS (Arbeitsmarkt-Chancen-Assistenzsystem, the labor market opportunities assistance system) nor to the data used for it in the course of our study, the calculations in the scenario are fictitious. They cannot compute actual IC values (Integrationschancen-Werte, integration chance values) or error rates, but they can plausibly illustrate the problems associated with AMAS in the interplay of technical bias and social practice (based on the documents & materials the AMS made available to us). In our report we wrote three further scenarios, each of which addresses a concrete problem in the practical handling of AMAS. They can be read in detail here.

AMS-algorithm_final report out! yay!

Finally, our report of the project on the so-called AMS-algorithm is out!!! YAY!!! It was a lot of work, but it was definitely worth it! It is an in-depth sociotechnical analysis and deconstruction of the algorithm the Austrian public employment service (AMS) is planning to roll out all over Austria starting from January 2021. The algorithm poses several severe challenges on both the institutional level and the larger societal level, as we – Doris Allhutter, Florian Cech, Fabian Fischer and Gabriel Grill – argue in our report. We’d like to thank the Upper Austrian Chamber of Labor (AK OÖ) that financed our study and particularly Dennis Tamesberger for his support throughout the process. Here you can download the full report.

The report triggered lots of media coverage, e.g. in APA Science and at orf.at. We also gave some interviews, e.g. for the Austrian Academy of Sciences, Futurezone and the radio broadcaster Ö1 (all in German). The final report is in German, but English publications will (hopefully) follow SOON! For now I’d like to point you to the English publication in Frontiers in Big Data that we published a year ago (based only on publicly available materials back then, but still relevant in its argumentation):

Allhutter, D., Cech, F., Fischer, F., Grill, G. & Mager, A. (2020) “Algorithmic Profiling of Job Seekers in Austria: How Austerity Politics Are Made Effective”, Frontiers in Big Data (Special Issue Critical Data and Algorithm Studies), full text; open access here.

NM&S special issue “future imaginaries”

The special issue I guest-edited together with Christian Katzenbach is (finally) going to be published in February 2021. I’d like to thank Steve Jones for his support throughout this rather long, but ultimately rewarding process. It’s a pity SAGE won’t publish an online first version of the articles, but well, they apparently agreed to do so for future New Media & Society special issues, which I’d highly appreciate!!! Thanks also to all our authors for their fine contributions and their patience! If you’d like to read our editorial “Future imaginaries in the making and governing of digital technology: Multiple, contested, commodified”, I’d like to point you to our preprint version here. Let us know what you think about our interpretations and advancements of the concept of “sociotechnical imaginaries” by Sheila Jasanoff and related analytical tools to capture, understand, and investigate future imaginaries in the making and governing of digital technology. It’s an ongoing debate we wanted to contribute to with this special issue and its excellent contributions.

deus ex machina?

Under this title I recently wrote a book review of Roberto Simanowski’s book “Todesalgorithmus. Das Dilemma der künstlichen Intelligenz” for Soziopolis. In short: the book, which deals with artificial intelligence (AI) from the perspective of moral philosophy, is recommended! Its speculative approach is at times also very entertaining, for example the almighty eco-AI that will save humankind (and our planet) from itself. The full review can be read here.

ARS electronica workshop

This year I have the pleasure of giving a workshop together with Hong Phuc Dang as part of the ARS Electronica Festival 2020. The title is “How to create your own AI device with SUSI.AI – An Open Source Platform for Conversational Web” and it’s part of an overall event that Waltraud Ernst and colleagues from the University of Linz have organized. The whole event deals with bias and discrimination in algorithmic systems: “How to become a high-tech anti-discrimination activist collective”, with awesome keynotes by Lisa Nakamura and Safiya Noble; more information can be found on the ARS/ Uni Linz website. There you can also register if you’re interested in participating! I’m already looking forward to this event!!! 🙂

4s/EASST conference, virtual prague

Tomorrow the huge 4S/EASST conference starts, albeit virtually. It’s the first ZOOM conference I’m attending at this scale and I’m really curious how that will work out! 😉 I’m involved in three sessions:

- First, I’m contributing a paper on my alternative search engines research to the “grassroot innovation” session that nicely fits the scope of my paper. My first analysis of the YaCy/ SUSI.AI research materials is on visions/ politics of open source developer communities, their practices & politics, their relation to both the state and capital and how cultural differences play into all that – comparing Europe, Asia and the US. Here’s the link to the session.

- Second, I’m involved in the session “building digital public sector” with our research on the “AMS algorithm” (together with Doris Allhutter, Florian Cech, Fabian Fischer & Gabriel Grill). We’re presenting a sociotechnical analysis of the algorithm and its biases & implications for social practices. Here’s the link to the session & here’s the link to our article for further information.

- Finally, Christian Katzenbach and I have submitted a paper to the session “lost in the dreamscapes of modernity?” that covers the main arguments of our editorial to the special issue in NM&S on future imaginaries in the making and governing of digital technology (which is currently in press and will hopefully be published soon!). The editorial argues that sociotechnical imaginaries should not only be seen as monolithic, one-dimensional and policy-oriented, but also as multiple, contested and commodified; especially in the field of digital tech. Here’s the link to the session.

If you’re registered for the conference you can find the links to the ZOOM meetings right next to the sessions.

Digitale Weichenstellungen in der Krise

Here’s the link to my Standard blog post on COVID-19 & digitalization (in German); the teaser, in translation, reads as follows:

E-learning platforms and videoconferencing tools have nestled into our everyday lives. There is hardly a household that can still escape them. Nevertheless, fundamental technopolitical questions must not be lost sight of.

algorithmic profiling

I’m very happy that our article “Algorithmic Profiling of Job Seekers in Austria: How Austerity Politics Are Made Effective” by Doris Allhutter, Florian Cech, Fabian Fischer, Gabriel Grill and me (from the ITA, TU Wien, University of Michigan) is published now!! 🙂 It’s part of the special issue “Critical Data and Algorithm Studies” in the open access journal Frontiers in Big Data edited by Katja Mayer and Jürgen Pfeffer! Thanks Katja for a speedy review process!! Surprisingly, the article resonated quite strongly in the academic as well as the public sphere. Lots of journalists etc. got interested in this “first scientific study” on “the AMS Algorithm”. Since we’re currently working on an additional study comprising a deeper analysis of our own materials (including our own data inquiry to the AMS), we’re not able to talk much about this paper in public at this specific moment. But new insights will follow by the end of May or mid-June at the latest, so stay tuned!!! Here’s the project description of the current study funded by the Arbeiterkammer OÖ.

media coverage

Last year, my work was covered by various media outlets and events. First, the Austrian Academy of Sciences (ÖAW) did a portrait/ interview with me on the way visions and values shape search engines as part of their series “Forschen für Europa”. This piece included a fancy photo shoot, as you can see here. Second, I was invited to take part in the panel discussion of the ORF Public Value event “Occupy Internet. Der gute Algorithmus” (together with Tom Lohninger from epicenter.works, Matthias Kettemann from the Hans-Bredow-Institut and Franz Manola from the ORF Plattformmanagement). The live discussion took place at the “Radiokulturhaus” and was aired on ORF 3 thereafter. Here you can find the abstract, the press release and the video in case you want to watch the whole discussion. Finally, I was invited as a studio guest to the radio broadcast “Punkt 1” at Ö1, “Das eingefärbte Fenster zur Welt”, where I spoke about alternative search engines and people could phone in or ask questions by email. Talk radio it is! 😉 – all in German.
